In the ever-evolving world of technology, the race to build the fastest and smartest Artificial Intelligence (AI) tools is heating up. When you interact with ChatGPT, particularly through the GPT-4 model, it’s apparent that the model takes a while to reply to queries.

Voice assistants built on large language models, such as ChatGPT’s Voice Chat or the newly launched Gemini AI, show the same delay.

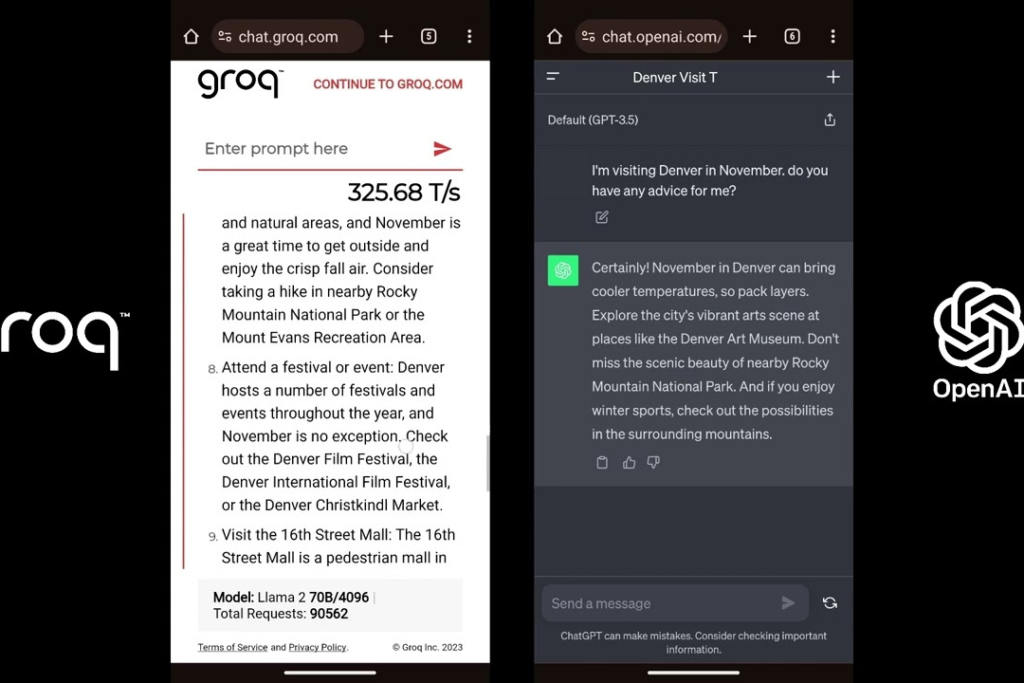

Groq, a new name on the block, is set to revolutionize how we approach AI acceleration. Unlike its popular counterparts, ChatGPT and Gemini, Groq isn’t just another AI model; it’s a supercharged AI accelerator designed to boost computational speed and efficiency to unprecedented levels.

This breakthrough technology aims to propel AI applications forward, making processes faster and more reliable.

This article explores Groq’s technology, its potential impact on the AI landscape, and how it might eclipse established players such as ChatGPT and Gemini. Let’s dive in!

Groq is an AI startup focused on creating ultra-efficient, high-performance computing hardware. At its core is a unique semiconductor design that emphasizes simplicity, speed, and determinism.

Unlike traditional architectures that depend heavily on complex control logic and prediction mechanisms, Groq’s approach aims to eliminate inefficiencies, thereby significantly accelerating data processing tasks.

The architecture revolves around the Groq Tensor Streaming Processor (TSP), designed specifically for machine learning operations. This TSP enables the execution of AI-related tasks at breakneck speeds, promising to make operations like natural language processing, image recognition, and real-time analytics faster and more energy-efficient.

Delving into the heart of Groq’s technological innovation, we uncover the Language Processing Unit (LPU), a groundbreaking creation designed to turbocharge artificial intelligence (AI) operations. This bespoke component marks a radical departure from the norm, setting a new benchmark in the AI industry.

Traditional computing solutions for AI, notably Graphics Processing Units (GPUs), while effective, have limitations in handling the intricate demands of modern AI tasks. In stark contrast, Groq’s LPU emerges as a beacon of efficiency and speed, redefining the landscape of computational hardware.

This specialized unit is engineered from the ground up with the singular aim of accelerating AI workloads, showcasing exceptional prowess in executing tasks at lightning speeds.

In summary, Groq’s Language Processing Unit is not just an advancement; it’s a reimagining of how hardware can propel AI development forward. By prioritizing speed, efficiency, and specificity, the LPU is poised to lead the charge toward a new horizon in AI technological advancement.

Groq’s potential to outperform heavyweights like ChatGPT and Gemini lies in its groundbreaking speed and efficiency. By drastically reducing the time required to process complex AI tasks, Groq could enable near-instantaneous responses in AI-driven applications, thereby setting a new standard in performance.

Another aspect where Groq shines is its scalability. Its architecture is designed to be linearly scalable, meaning that performance is expected to grow roughly in proportion to the number of TSPs added, which makes capacity planning predictable.

This scalability can be especially beneficial in the deployment of large AI models, where Groq’s technology could significantly lower the barrier to entry in terms of both cost and complexity.
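To make the idea of linear scaling concrete, here is a minimal Python sketch of the arithmetic. The function names and the per-chip throughput figures are hypothetical and chosen only for illustration; they are not Groq specifications, and real systems rarely scale perfectly.

```python
import math


def linear_throughput(per_chip_tokens_per_s: float, num_chips: int) -> float:
    """Ideal linear scaling: total throughput is proportional to chip count."""
    if num_chips < 1:
        raise ValueError("num_chips must be at least 1")
    return per_chip_tokens_per_s * num_chips


def chips_needed(target_tokens_per_s: float, per_chip_tokens_per_s: float) -> int:
    """Smallest chip count that meets the target under ideal linear scaling."""
    if per_chip_tokens_per_s <= 0:
        raise ValueError("per_chip_tokens_per_s must be positive")
    return math.ceil(target_tokens_per_s / per_chip_tokens_per_s)


# Hypothetical numbers, not measured Groq figures.
print(linear_throughput(100.0, 8))   # 800.0
print(chips_needed(750.0, 100.0))    # 8
```

Under this assumption, doubling the number of chips doubles throughput, so the hardware needed for a given workload can be estimated with a single division.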

In an era where sustainability is becoming increasingly important, Groq offers an enticing proposition by potentially lowering the energy consumption of AI operations. Its efficient design could help reduce the carbon footprint associated with running large AI models, a compelling advantage over its rivals.

ChatGPT, OpenAI’s flagship conversational AI, and Gemini, Google’s multimodal AI model, both require tremendous processing resources to function effectively.

As these models get more sophisticated and capable of handling increasingly complicated tasks, the demand for quicker inference speeds grows dramatically.

Groq’s AI accelerator addresses this demand for speed. Its architecture is built to accelerate AI computations, which could allow large models of the kind behind ChatGPT and Gemini to process massive volumes of data in real time.
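A rough way to see why inference speed matters is to estimate how long a reply takes at different generation rates. The sketch below is a simple approximation; the function name and the tokens-per-second figures are hypothetical, not benchmarks of any real system.

```python
def response_time_s(num_tokens: int, tokens_per_s: float,
                    time_to_first_token_s: float = 0.0) -> float:
    """Approximate reply time: startup delay plus generation time."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return time_to_first_token_s + num_tokens / tokens_per_s


# Hypothetical rates for a 300-token answer.
slow = response_time_s(300, 30.0)    # 10.0 seconds
fast = response_time_s(300, 300.0)   # 1.0 second
print(slow, fast, slow / fast)       # 10.0 1.0 10.0
```

With these illustrative numbers, a tenfold increase in generation rate turns a ten-second wait into about one second, which is the difference between a noticeable pause and a reply that feels immediate.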

Groq’s technology stands out in the world of Artificial Intelligence (AI) applications because of how it’s built to tackle AI’s big appetite for speed and efficiency. Imagine you have a task that normally takes a lot of time and effort, like sorting through a massive library to find all books written by a specific author.

Now, imagine if you had a super-efficient, speedy method that could find and organize those books in no time. That’s akin to what Groq’s technology does for AI.

Here’s why Groq’s tech is especially suited for AI:

At the heart of AI applications, especially those involving learning and adapting (called machine learning), there’s a need to process a vast amount of data quickly. Groq’s chips are like brainy speedsters. They can handle a massive number of calculations at lightning speed, making them perfect for the heavy lifting required by AI tasks.

Imagine a factory where each worker knows exactly what to do and when to do it, and doesn’t waste time moving around. Groq’s architecture is somewhat similar. It’s designed to streamline processes, reducing the back-and-forth that typically happens in computer chips. This means data is processed more efficiently, saving time and energy.

In computing, being deterministic means you can expect the same outcome if you repeat a process under the same conditions. Groq’s technology is deterministic, which is a big deal for AI. It ensures that AI applications can run predictably and consistently, which is crucial for reliability and trust in systems like autonomous vehicles or financial modeling.
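One way to picture this, assuming determinism covers timing as well as results, is to compare a fixed schedule with one that suffers random stalls. The step names and cycle counts below are hypothetical and do not describe Groq’s actual hardware.

```python
import random

# Hypothetical fixed cycle costs for the steps of one inference pass.
STATIC_SCHEDULE = [("load_weights", 40), ("matmul", 120),
                   ("activation", 15), ("store", 25)]


def deterministic_latency(schedule):
    """Latency is the sum of fixed per-step costs, known before the run."""
    return sum(cycles for _, cycles in schedule)


def jittery_latency(schedule, rng, max_stall=30):
    """Same steps, but each may stall for a random number of extra cycles."""
    return sum(cycles + rng.randint(0, max_stall) for _, cycles in schedule)


# Five deterministic runs collapse to a single latency value.
runs_det = {deterministic_latency(STATIC_SCHEDULE) for _ in range(5)}
print(runs_det)  # {200}

# Five jittery runs vary from one run to the next.
rng = random.Random(0)
runs_jit = [jittery_latency(STATIC_SCHEDULE, rng) for _ in range(5)]
print(min(runs_jit), max(runs_jit))
```

The deterministic version always reports the same latency, so a system designer can plan around it; the jittery version can only be characterized by a range.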

Instead of being a jack-of-all-trades, Groq’s chips are like master craftsmen specialized in AI tasks. This specialization allows them to excel at specific functions required by AI, such as tensor operations (a kind of mathematical operation that’s common in machine learning). It’s like having a tool designed to do one job well, making the entire process more efficient.
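For readers unfamiliar with tensor operations, the sketch below shows one of the most common: a dense layer that multiplies an input vector by a weight matrix and adds a bias. It uses plain Python lists for clarity; the function name and values are illustrative only and say nothing about how Groq’s chips implement the operation.

```python
def dense_layer(x, weights, bias):
    """Compute y = x @ weights + bias for one input vector using plain lists."""
    outputs = []
    # zip(*weights) iterates over the columns of the weight matrix.
    for column, b in zip(zip(*weights), bias):
        outputs.append(sum(xi * wi for xi, wi in zip(x, column)) + b)
    return outputs


x = [1.0, 2.0]                      # input vector of length 2
weights = [[1.0, 0.0, 2.0],
           [0.0, 1.0, 3.0]]         # 2 x 3 weight matrix
bias = [0.5, 0.5, 0.5]

print(dense_layer(x, weights, bias))  # [1.5, 2.5, 8.5]
```

A large language model repeats operations like this billions of times per reply, which is why hardware specialized for them can make such a difference.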

Talk of Groq “taking over” ChatGPT and Gemini might sound like direct competition, but it’s more of an evolution. Groq builds hardware, while ChatGPT and Gemini are AI models, so it’s not about running the same race faster; it’s about changing the game. If Groq’s technology proves to be as revolutionary as it claims, it could redefine how AI services like ChatGPT and Gemini operate, making them even more powerful and efficient.

This doesn’t necessarily mean Groq will replace these tools; rather, it might enhance the infrastructure that makes these AIs smarter and more responsive.

However, technology is only one component of the solution. The adoption of Groq’s innovations will depend on factors like ease of integration, cost, and the actual performance gains in real-world applications. Also, the AI field is known for its rapid advancement, and companies like OpenAI are continuously evolving, potentially narrowing the window for Groq to make a significant impact.

Think of Groq’s products as really high-performance engines designed to power through specific tasks much faster and more efficiently than your average computer. So, who can benefit from using Groq? Let’s take a look:

Businesses that focus on AI are always on the lookout for the next big thing in tech. For those developing smart assistants or sophisticated recommendation systems (think of how Netflix suggests movies), Groq’s products can offer the high speed and efficiency they need.

Scientists and researchers who are crunching a huge amount of data to, say, map the human genome or understand climate change patterns, could see significant advantages in using Groq’s technology. It can dramatically speed up their work, helping them find answers and make discoveries much faster.

Hospitals and healthcare companies that use AI to analyze medical data, such as MRI images or large-scale health records, could greatly benefit. Groq can help process this information quickly and accurately, aiding in faster diagnoses and personalized medicine.

Firms in finance, like banks or insurance companies, use AI for things like fraud detection or analyzing market trends. Groq’s technology can process vast amounts of financial data at high speeds, making these analyses more efficient and potentially more accurate.

Companies working on self-driving cars need to process a ton of data from sensors in real time to make immediate decisions on the road. Groq’s products could be key to making autonomous vehicles safer and more reliable.

For businesses that deal in digital content, like video streaming or video game development, Groq’s capabilities can help in faster processing of graphics and data, ensuring a smooth and immersive user experience.

Overall, anyone dealing with complex computing tasks, especially those related to AI and large datasets, stands to benefit from what Groq offers. From speeding up research to improving services and products across a variety of fields, the potential applications are vast.

Overall, it’s an exciting development in AI, and the arrival of LPUs should let consumers interact with AI systems with far less delay. The considerable reduction in inference time lets users work with multimodal systems quickly, whether they are speaking, supplying images, or generating images.

Groq already offers developers API access, so applications built on it should see noticeably faster model responses soon. So, what are your thoughts on the evolution of LPUs in the AI hardware space?

As AI technology advances, the function of AI accelerators such as Groq becomes increasingly important. Groq’s exceptional speed, efficiency, and scalability position it to revolutionize the capabilities of AI models like ChatGPT and Gemini.

While the thought of Groq taking over ChatGPT and Gemini raises concerns about the future of these AI platforms, it also opens up new opportunities. By leveraging Groq, developers may create new applications, improve existing services, and push the boundaries of AI innovation.

This pairing of fast hardware with capable models promises not only to improve the efficiency and output of AI-driven solutions but also to enable applications once thought to belong to science fiction. The future points toward a smarter, more intuitive technological landscape made possible by the synergy between Groq and the pioneers of artificial intelligence.

Meet Groq, the new kid on the block in the world of AI accelerators. It’s designed to make AI tasks run super fast, possibly even outpacing the current leaders.

Imagine a turbocharged engine for AI—that’s Groq for you. It’s built to handle complex computations at lightning speed, which is crucial for AI technologies to work smoothly and efficiently.

While ChatGPT and Gemini have been the go-to for many, Groq’s entry might just mix things up. It’s like having a new super-fast car in a race dominated by speed—exciting times ahead for AI technology!

Groq’s AI accelerator stands out for its simplicity, efficiency, and unparalleled speed. It utilizes a unique architectural approach that enables deterministic computing, eliminating the unpredictability often seen in other systems.

While specific details may vary over time, Groq has actively sought partnerships across various sectors, including healthcare, finance, and cloud computing.

For those interested in Groq’s technology or in exploring potential collaborations, Groq’s official website is a good starting point. The site provides detailed information about the company’s products and technology, and how they can be applied in various industries.

As of the last update, Groq works with select partners and clients to integrate its AI accelerators into their systems. The company has been active in expanding its availability, though specific details regarding public access and widespread distribution may vary.