Physical Address

60 Ekwema Cres, Layout 460281, Imo

Meta, the parent company of Instagram and Facebook Messenger, is rolling out changes to strengthen the protection of minors online.

A surge of predators targeting children under 18 has caused serious concern. This prompted Meta to release new updates designed to keep the predatory targeting of minors in check.

The new updates aim to create a safer environment for young users by placing greater restrictions on who can message them and giving parents more control over their children’s security settings.

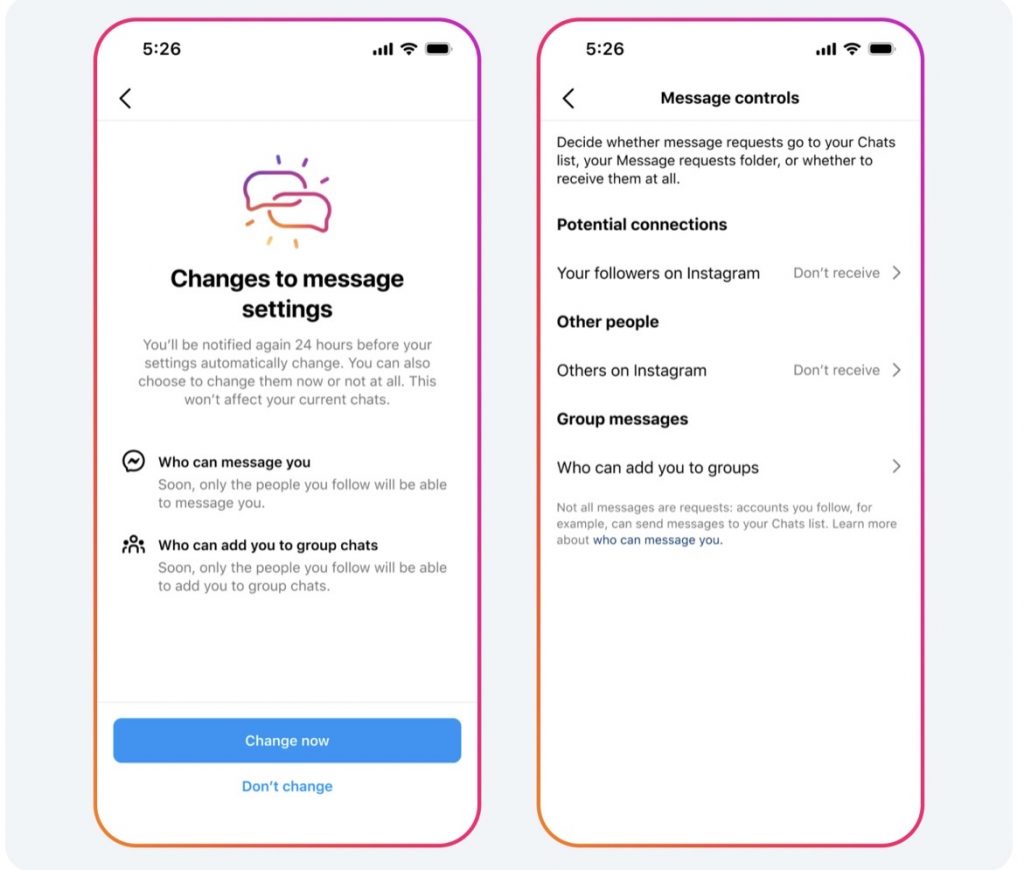

Now, by default, teens under the age of 16 (or under 18 in certain countries) won't receive messages from, or be added to group chats by, users they don't follow or aren't connected with on Instagram and Messenger.

The aim is to prioritize teens' well-being and shield them from potential risks. Safety should always come first, especially online.

These updates come as Meta responds to concerns that its algorithms have allowed predators to target children on Facebook and Instagram.

The company has been actively introducing safeguards to address these issues. Unlike previous restrictions, which only applied to adults over 19 messaging minors who don’t follow them, these new rules apply to all users, regardless of age.

Instagram users will receive a notification about the change at the top of their Feed. Teens using supervised accounts will need permission from their parents or guardians to modify this setting.

To enhance parental supervision, Instagram is expanding its tools. Instead of simply being notified when their child makes changes to safety and privacy settings, parents will now be prompted to approve or deny these requests, preventing their child from switching their profile from private to public without consent.

Meta is also developing a new feature to protect users from receiving unwanted or inappropriate images in messages from people they’re already connected with.

This feature will work in encrypted chats and aims to discourage the sending of such content altogether. While there’s no specific launch date yet, Meta plans to share more information later this year.

These updates demonstrate Meta's commitment to a safer online experience for young users and to empowering parents to take an active role in their children's digital lives, with the broader goal of fostering a secure and positive environment for everyone.